Netflix: Too Big to Fail?

What one of Netflix's biggest streaming events reveals about their infrastructure

On a night when 60 million households tuned in to watch Mike Tyson face Jake Paul, many found themselves fighting with loading screens instead.

For a company that processes 6 billion hours of streaming content monthly and wrote the book on chaos engineering (they invented the Chaos Monkey, after all), Netflix's Tyson-Paul fight streaming debacle wasn't just embarrassing—it was ironic.

Let's examine the technical challenges and architectural limitations of live streaming at scale.

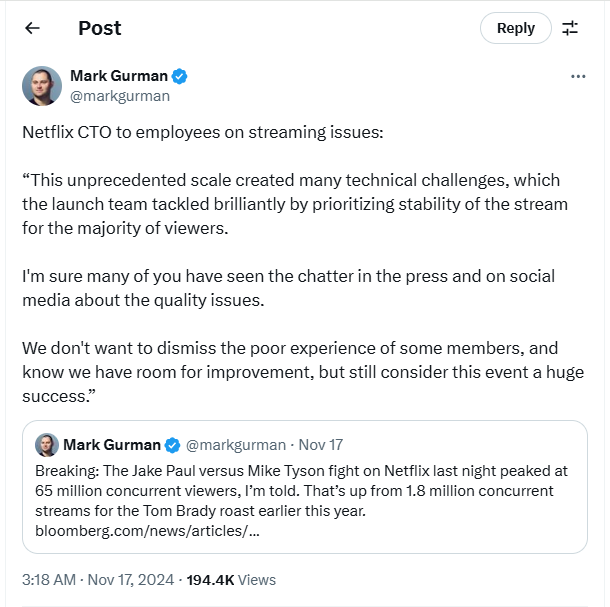

Mike Tyson-Jake Paul fight streaming: what happened

Netflix's much-anticipated Tyson-Paul fight left over 500,000 viewers staring at loading screens and error messages.

They claim 60 million households worldwide tuned in to watch Mike Tyson take on Jake Paul, marking what they called a "record-breaking night"—though not as they had hoped.

The hashtags "#Unwatchable" and "#NetflixCrash" trended on social media throughout the event due to repeated crashes.

(Source)

This wasn't Netflix's first live-streaming event. From Chris Rock's comedy special to the SAG Awards, and from live sports like golf to star-studded shows like the Tom Brady Roast, Netflix had successfully delivered eight live events before this fight.

This wasn't Netflix's first streaming stumble, either. The technical difficulties that hit the Love Is Blind live reunion in April 2023 should have been a warning sign.

However, the scale and stakes of the Tyson-Paul fight were unprecedented for the platform.

The issues were significant enough to prompt a class action lawsuit filed by Ronald "Blue" Denton, alleging that Netflix failed to fulfill its contractual obligations while continuing to bill subscribers despite the service disruptions.

(Source)

Netflix has proven it can stream live content, but the question is whether its infrastructure can handle the massive concurrent viewership that top sporting events demand.

While Netflix's streaming woes during the Tyson-Paul fight might seem unique, similar large-scale streaming failures have become an all-too-familiar story in live sports.

Examples of streaming struggles and success

The FIFA World Cup 2022 reminded us that even established platforms can struggle with massive viewership.

Jio Cinema, which won the streaming rights for India, faced its moment of truth during the opening game between Qatar and Ecuador.

What should have been a triumphant debut turned into a technical nightmare, with viewers experiencing severe lags and crashes during the match.

Although the platform recovered within a couple of days, the damage to viewer trust was already done, proving there are no second chances at a first impression in live streaming.

Even more telling was the failure of FIFA's official app. During the highly anticipated England vs. Iran match, approximately 500 fans were stranded outside the Khalifa International Stadium due to ticketing system failures.

This situation repeated during the US vs. Wales match, when digital tickets mysteriously "disappeared" from the app, leaving fans unable to access their accounts or transfer tickets.

Some platforms, however, have handled large-scale live streaming well.

Take Hotstar, for example. During the 2019 Cricket World Cup semi-final between India and New Zealand, the platform served 25.3 million concurrent viewers, handled 1 million requests per second, and consumed 10 Tbps of bandwidth.

(Source)

Their secret? A robust technical infrastructure called Project Hulk, which combined AWS Lambda with pre-warmed instances and strategic use of Akamai's CDN across eight geo-distributed regions.

Channel 7 also performed flawlessly during the Tokyo Olympics 2020. The Australian broadcaster achieved 100% uptime throughout the games and successfully streamed 4.7 billion minutes of content.

Their approach focused heavily on comprehensive observability tools and a deep understanding of viewer behavior patterns, proving that success in live streaming is about anticipating and adapting to viewer needs in real-time.

So, what went wrong with Netflix?

Netflix has remained silent about the specific technical issues behind the Tyson-Paul fight. But we can make an educated guess about some factors that may have contributed to the failure.

At the heart of the issue seems to be a fundamental architectural challenge. While robust for on-demand content, Netflix's infrastructure was originally designed and optimized for a different kind of streaming.

The platform's architecture excels at delivering movies and TV shows but faces challenges when handling millions of viewers watching the same content simultaneously.
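To get a feel for why simultaneous viewing is so demanding, here is a rough back-of-envelope sketch. The 25.3 million viewers and 10 Tbps are the Hotstar figures quoted earlier; the 5 Mbps bitrate and the idea that all 60 million households stream at the same instant are assumptions made purely for illustration, not reported numbers.

```python
MBPS_PER_TBPS = 1_000_000  # 1 Tbps = 1,000,000 Mbps


def aggregate_tbps(viewers: int, bitrate_mbps: float) -> float:
    """Total egress needed if every viewer pulls one stream at bitrate_mbps."""
    return viewers * bitrate_mbps / MBPS_PER_TBPS


def implied_bitrate_mbps(total_tbps: float, viewers: int) -> float:
    """Average per-viewer bitrate implied by a total bandwidth figure."""
    return total_tbps * MBPS_PER_TBPS / viewers


# Hotstar figures from the article, assuming both peaks coincided.
hotstar_avg = implied_bitrate_mbps(total_tbps=10, viewers=25_300_000)

# Hypothetical worst case: 60 million simultaneous streams at an assumed 5 Mbps.
worst_case = aggregate_tbps(viewers=60_000_000, bitrate_mbps=5.0)

print(f"Hotstar implied average: {hotstar_avg:.2f} Mbps per viewer")
print(f"Hypothetical worst case: {worst_case:.0f} Tbps")
```

Under these assumptions, Hotstar's numbers work out to roughly 0.4 Mbps per viewer, while the hypothetical worst case lands at 300 Tbps. The exact figures matter less than the shape of the problem: in live streaming, total demand scales directly with concurrent viewers, and there is no way to spread it out over time.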

Another factor could be Netflix's reliance on its proprietary Content Delivery Network (CDN), Open Connect. While this system has served Netflix admirably for years in delivering on-demand content, live streaming is a different beast.

When traffic spikes beyond anticipated levels and caches are exhausted, the absence of fallback options becomes critical.

The peering network constraints during peak viewership likely compounded these issues.

When millions of viewers simultaneously request the same content, the interconnections between different networks can become overwhelmed. Without redundant pathways or alternative routes, these bottlenecks can degrade the end-user experience.
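One common way to add a fallback option is to spill traffic over to a second delivery network once the first runs out of headroom. The sketch below is a toy model of that idea, not a description of how Netflix or Open Connect works; the `Cdn` class, the `assign_stream` function, and the capacity numbers are all hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Cdn:
    """A hypothetical delivery network with a fixed capacity budget."""
    name: str
    capacity_mbps: int
    load_mbps: int = 0

    def has_room_for(self, stream_mbps: int) -> bool:
        return self.load_mbps + stream_mbps <= self.capacity_mbps


def assign_stream(cdns: List[Cdn], stream_mbps: int) -> Optional[str]:
    """Place one stream on the first CDN (in priority order) with headroom.

    Returns the chosen CDN's name, or None when every option is saturated.
    """
    for cdn in cdns:
        if cdn.has_room_for(stream_mbps):
            cdn.load_mbps += stream_mbps
            return cdn.name
    return None


# Toy numbers: each network can carry two 5 Mbps streams.
cdns = [Cdn("primary", capacity_mbps=10), Cdn("fallback", capacity_mbps=10)]
placements = [assign_stream(cdns, 5) for _ in range(5)]
print(placements)  # ['primary', 'primary', 'fallback', 'fallback', None]
```

The fifth viewer gets `None`, which is the loading-screen case: with only one network in the list, that failure would arrive after the second stream instead of the fourth.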

Unlike on-demand content, where loading delays are inconvenient but manageable, every second of lag in live streaming can mean missing a remarkable moment.

The technical requirements for delivering smooth, real-time content to millions of concurrent viewers demand not just robust infrastructure but specific expertise in live event streaming, something that typically develops through multiple iterations and years of experience.

Btw, enjoy the turkey, the tech, and the time with loved ones—Happy Thanksgiving!
