Amazon Cuts 90% Infra Cost by Going Monolith

Amazon’s Prime Video saved big by using a monolith. So should we all go back to monolithic architectures?

High cloud infrastructure costs bother most teams.

Some of it stems from wasteful spending like unused database instances, logging apps left turned on, etc.

But more often, the bigger culprit is an application architecture that doesn’t fit the workload.

Amazon’s Prime Video team encountered a similar problem. And they dealt with it by modifying the architecture.

But their case study stirred up the monolith vs. microservices debate yet again.

Here’s what happened.

Troubled by high cloud infra costs

The Video Quality Analysis (VQA) team at Prime Video set up a tool to monitor every stream for quality issues.

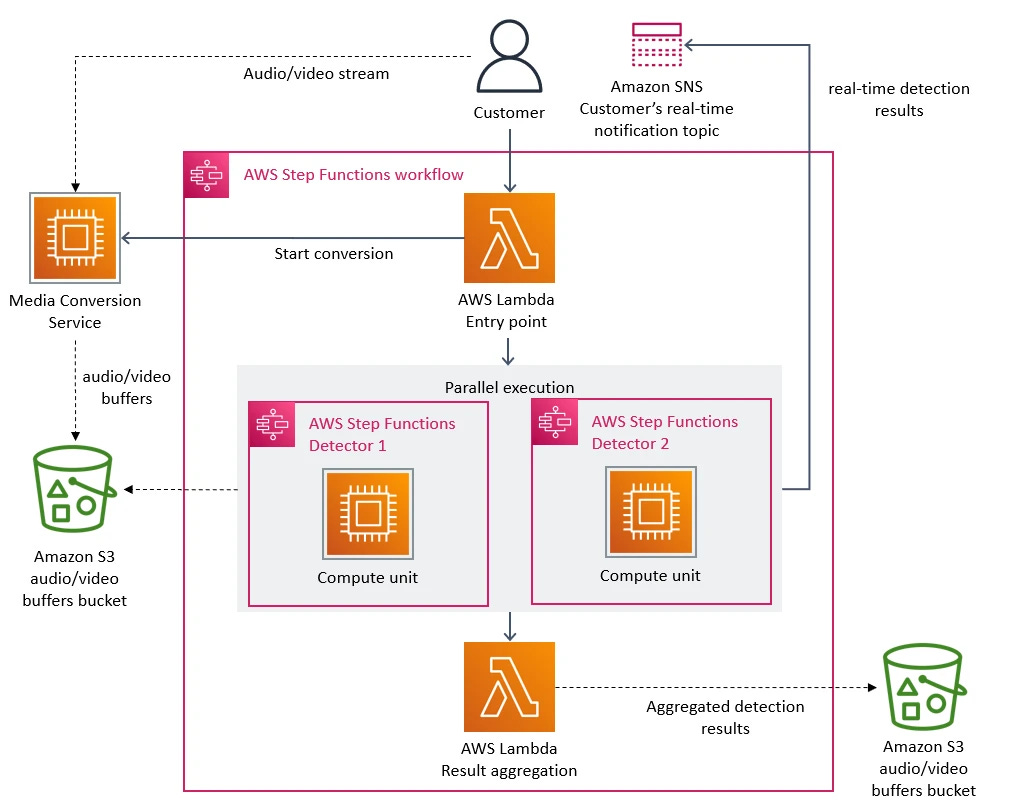

The tool architecture was a distributed serverless system with multiple components. It consisted of a control plane and a data plane along with the following components.

Media Converter: A microservice responsible for converting input audio/video streams into frames or decrypted audio buffers that were sent to detectors.

Defect Detectors: These were separate microservices that executed algorithms to analyze frames and audio buffers in real-time, looking for defects such as video freeze, block corruption, or audio/video synchronization problems. They downloaded images and processed them concurrently using AWS Lambda.

Orchestration: Implemented via AWS Step Functions, this component controlled the flow and managed the state transitions within the service.

Input Stream Processing: A microservice that split videos into frames, temporarily uploading images to an Amazon S3 bucket for defect detectors to process.

Customer Request Handling: A Lambda function that managed customer requests and forwarded them to relevant step functions executing detectors.

Results Storage: Aggregated results were stored in an Amazon S3 bucket.

Source: Prime Video tech blog

The solution was easy to set up and worked well at the beginning. However, as Prime Video catered to a larger audience, the team encountered two major challenges.

High infrastructure cost: The serverless architecture, comprising AWS Step Functions, AWS Lambda, and Amazon S3, was becoming very expensive when scaled to thousands of streams. The cost of state transitions in AWS Step Functions and the high number of Tier-1 calls to the S3 bucket contributed to the high cost.

Scaling bottlenecks: While onboarding more streams, the team noticed that they were hitting a hard scaling limit at around 5% of the expected load. The main scaling bottleneck was the orchestration management implemented using AWS Step Functions, which resulted in reaching account limits due to multiple state transitions for every second of the stream.
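A rough way to see why per-second orchestration hurts at scale is to count operations. The sketch below is a back-of-the-envelope model only: the class and function names are hypothetical, and the workload numbers and the quota are made-up placeholders, not Prime Video's figures or actual AWS limits.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StreamWorkload:
    """Hypothetical per-stream workload; none of these values come from the case study."""
    transitions_per_second: int  # orchestration state transitions per second of stream
    s3_requests_per_second: int  # frame uploads and downloads per second of stream


def operations_per_second(workload: StreamWorkload, streams: int) -> dict[str, int]:
    """Total operations per second across all monitored streams."""
    return {
        "transitions": workload.transitions_per_second * streams,
        "s3_requests": workload.s3_requests_per_second * streams,
    }


def max_streams_under_quota(workload: StreamWorkload, transition_quota_per_second: int) -> int:
    """Largest stream count that stays within an assumed account-level transition quota."""
    return transition_quota_per_second // workload.transitions_per_second


# Placeholder numbers chosen only to make the arithmetic easy to follow.
workload = StreamWorkload(transitions_per_second=5, s3_requests_per_second=20)
print(operations_per_second(workload, streams=1_000))
# {'transitions': 5000, 's3_requests': 20000}
print(max_streams_under_quota(workload, transition_quota_per_second=2_500))
# 500
```

Both totals grow linearly with the number of streams, so any fixed account limit on transitions caps how many streams can be onboarded, regardless of how well the individual components scale.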

The solution needed architectural changes

To overcome these challenges, the team took a step back and reevaluated their existing architecture.

They rearchitected their defect detection system by moving from a distributed, serverless architecture to a monolithic architecture.

The new setup runs all components within a single process, deployed on Amazon Elastic Compute Cloud (Amazon EC2) and Amazon Elastic Container Service (Amazon ECS). Here's an overview of the new architecture:

1. Control and Data Plane: All components now run within a single ECS task, eliminating the need for network-based control and reducing data sharing overhead.

2. In-Memory Data Sharing: By integrating all components into a single process, data transfer happens directly in-memory instead of using Amazon S3 as intermediate storage for video frames, reducing costs and latency.

3. Simplified Orchestration: The need for AWS Step Functions was eliminated, and orchestration logic was simplified since components were controlled within a single instance.

4. Vertical Scaling: Detectors now scale vertically as they all run within the same instance, with some horizontal scaling achieved by cloning the service multiple times and parameterizing each instance with a different subset of detectors.

5. Lightweight Orchestration Layer: Added to distribute customer requests among the multiple instances running different detectors.

6. Results Storage: The aggregated results are still stored in an Amazon S3 bucket, and each detector's individual results are stored there as well.

Source: Prime Video tech blog
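The ideas in the list above can be sketched in a few lines. This is a toy illustration, not Prime Video's code: the detectors, the `AnalysisService` class, and `route_request` are hypothetical names, frames are plain `bytes`, and the routing layer assumes every request is fanned out to every instance.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, Iterator

Frame = bytes  # stand-in for a decoded video frame
Detector = Callable[[Frame], bool]  # returns True when a defect is found


def convert_stream(chunks: Iterable[bytes]) -> Iterator[Frame]:
    """Media converter stand-in: yields frames that stay in process memory."""
    for chunk in chunks:
        yield chunk


def detect_black_frame(frame: Frame) -> bool:
    """Toy detector: flags a non-empty frame made up entirely of zero bytes."""
    return len(frame) > 0 and not any(frame)


def detect_empty_frame(frame: Frame) -> bool:
    """Toy detector: flags a frame with no data at all."""
    return len(frame) == 0


DETECTORS: dict[str, Detector] = {
    "black_frame": detect_black_frame,
    "empty_frame": detect_empty_frame,
}


@dataclass
class AnalysisService:
    """One service instance, parameterized with a subset of detectors."""
    detector_names: list[str]

    def analyze(self, chunks: Iterable[bytes]) -> dict[str, list[int]]:
        """Run each configured detector on each frame; return defect frame indices."""
        results: dict[str, list[int]] = {name: [] for name in self.detector_names}
        for index, frame in enumerate(convert_stream(chunks)):
            for name in self.detector_names:
                if DETECTORS[name](frame):  # plain function call, no network hop
                    results[name].append(index)
        return results


def route_request(instances: list[AnalysisService], chunks: list[bytes]) -> dict[str, list[int]]:
    """Lightweight orchestration stand-in: fan a request out and merge the results."""
    merged: dict[str, list[int]] = {}
    for instance in instances:
        merged.update(instance.analyze(chunks))
    return merged


stream = [b"\x01\x02", b"\x00\x00", b"", b"\x03"]
instances = [AnalysisService(["black_frame"]), AnalysisService(["empty_frame"])]
print(route_request(instances, stream))
# {'black_frame': [1], 'empty_frame': [2]}
```

The point of the sketch is that frames pass from converter to detectors as ordinary function arguments, so there is no intermediate storage to pay for and no external state machine to coordinate.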

The new monolithic architecture significantly reduced infrastructure cost, increased scaling capabilities, and allowed the Prime Video team to monitor all streams viewed by their customers for better video quality and an enhanced customer experience.

The move cut infrastructure costs for this service by more than 90%.

Wasn’t really a monolith vs. microservices thing

When Prime Video published this case study on its tech blog, the monolith vs. microservices debate reignited.

The tech world was raving about the monolithic architecture, and rightly so. A 90% cost saving is a remarkable feat.

According to many, the irony was that Prime Video saved on infra costs by moving away from AWS’s own serverless services.

But this case was more about choosing the right architecture for a specific application. Prime Video didn’t abandon microservices; it moved one of its services to a better-suited architecture.

In fact, going serverless was the right decision at first, since it was easier and quicker to set up. But as the system scaled, pay-per-use pricing made it a less feasible option.
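The trade-off can be illustrated with a simple break-even comparison. This is a sketch under made-up assumptions: the function names are hypothetical, the prices and capacities are placeholders rather than AWS pricing, and it ignores engineering time and operational overhead.

```python
import math


def pay_per_use_cost(units: int, price_per_unit: float) -> float:
    """Cost that grows linearly with every unit of work processed."""
    return units * price_per_unit


def provisioned_cost(units: int, units_per_instance: int, price_per_instance: float) -> float:
    """Cost of running enough fixed-price instances to cover the load."""
    instances = max(1, math.ceil(units / units_per_instance))
    return instances * price_per_instance


def cheaper_option(units: int) -> str:
    """Compare both models using placeholder prices (not real AWS pricing)."""
    serverless = pay_per_use_cost(units, price_per_unit=0.5)
    fixed = provisioned_cost(units, units_per_instance=1_000, price_per_instance=200.0)
    return "pay-per-use" if serverless < fixed else "provisioned"


print(cheaper_option(100))     # pay-per-use
print(cheaper_option(10_000))  # provisioned
```

With these placeholder numbers, pay-per-use wins while the load is small and loses once the load is large enough to keep provisioned instances busy.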

Prime Video as a whole isn’t a monolith now. It’s still a microservices application, with one of its services refactored into a monolith.

And they showcased a great example of how to use the right tool for the right job at the right time.